Metadata Documentation and Accessibility

Administrative data can be an excellent source of information for use in research and evaluating impact of programs. The purpose of this section is to provide a step-by-step guide for documenting administrative datasets in a standardized format called a metadata catalog. This format can be self-administered by staff within the department or the agency or compiled by non-departmental staff tasked with the cataloging activity with inputs from departmental staff.

2.1: Why document department datasets?

There are numerous challenges in identifying the necessary digital infrastructure to enable interlinkages or interoperability within existing data systems. The combining and analyzing data to detect patterns and generate insights enhance the potential of use of data for policy decisions. Interoperability is the extent to which the data are capable of undergoing integration or of being integrated with other datasets. The effectiveness of administrative data are amplified when datasets can be linked with one another. The ability to link datasets, such as birth registry to immunization data, anganwadi enrolment data to school data, can enable policy makers to quickly identify beneficiaries who may fall through the cracks while transitioning from one stage of life to another.

Challenge 1: Datasets are collected and stored in different formats and locations, at varying digitization levels (semi-structured to structured data).

For instance, social sectors such as health, education, social welfare etc. have many beneficiary focused schemes that capture information on services and benefits delivered to individuals, typically entered by a frontline worker into mobile applications. Other datasets within these same departments could be at the institutional level (health centers, hospitals, schools, anganwadi centers etc.). There are departments such as Food, Revenue, and Taxation which primarily have registration and transactional databases, automatically generated through digitized processes (online applications, point of sale devices, SMS, etc.), whereas Land revenue, Pollution Boards, Power, and other infrastructure departments have diverse area-based and asset information such as road networks, satellite images of land and water bodies, electricity stations, air and water pollution levels etc. Finally, there may be Government-wide information systems such as call records from grievance redressal mechanisms, bills and financial transactions from Treasury, human resource data, census data and others.

A combination of various formats of these datasets are required to be interlinked and joined together to draw any meaning or for any further analysis. Governments and agencies are making strides towards bridging siloed datasets and connecting them to better inform decisions and deliver public services effectively.

Challenge 2: Lack of information on what data are available is another challenge in facilitating the use of the data. Even when information is available it is often scattered, not maintained in standardized formats or limited to a few persons.

In order to facilitate greater use of data, It is important not only to know what data-sets are available, but also the details about the data collection process, management, storage and the existing use.

Solution: A central and comprehensive metadata catalog would help first identify all the available administrative and secondary data sources available with the government. Documenting datasets in a standardized format (or a metadata catalog) helps to address the above challenges. Such a central and routinely updated metadata catalog would enable ideas for linking across datasets and innovative use of data contained therein for planning and decision making purposes. With increasing focus on open data and data sharing policies, the data catalog can then be made accessible for external use with a process for accessing data. The feedback from users can further be used to update the documentation and improve data quality and accessibility.

Cataloging datasets will allow the department, partner and agency to understand the magnitude of the data that is generated and owned, and a standardized format of data across various departments within the organization or government will facilitate interoperability between departments

2.2: What is a Metadata Catalog (MDC)?

Metadata is often simply defined as “data about data.” Metadata provides the information necessary to use a particular source of data effectively, and may include information about its source, structure, underlying methodology, topical, geographic and/or temporal coverage, license, when it was last updated and how it is maintained. An MDC is a standardized format for collation of information on all existing data-sets available with the department. For each data-set it captures description of basic information such as key data contents or fields, process of data generation, rules to access, storage, and types of data available and potential usability. These data-sets can be closed i.e. accessible only by the department or publicly available; they can be administrative data or survey data. A few examples of public data catalogs include data.gov.in, World Bank datasets etc.

2.3: Populating a Metadata Data Catalog

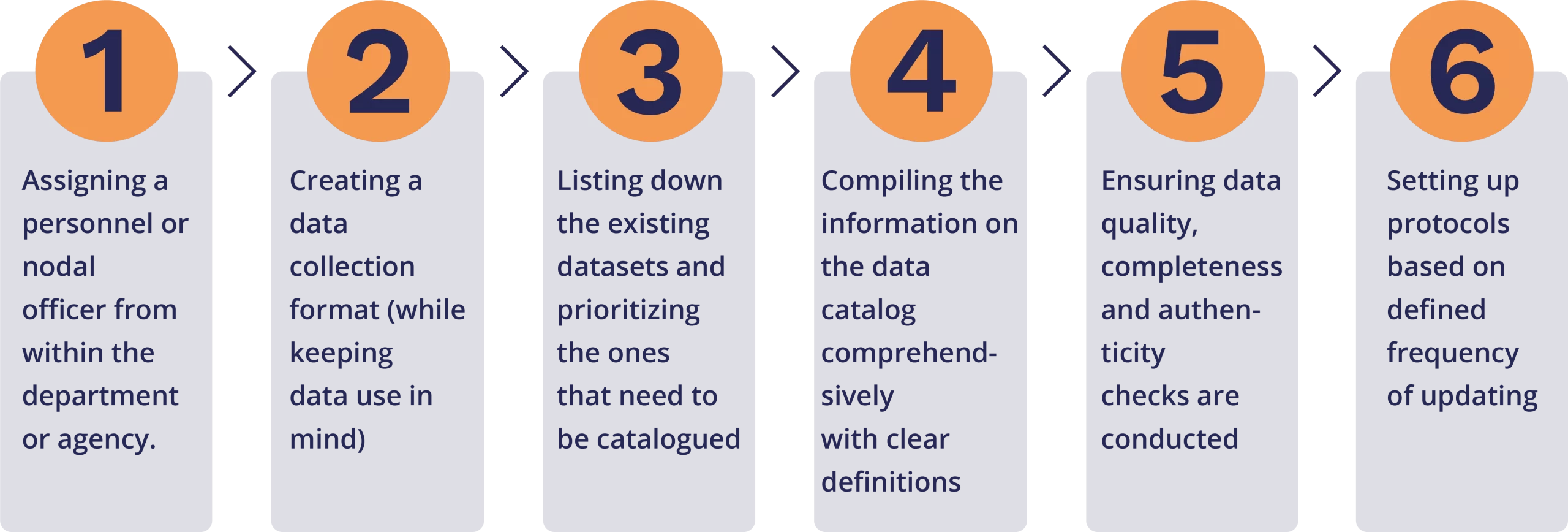

This section provides a step-by-step guide on how to create a metadata catalog (MDC) for your purpose.

Flowchart: The Metadata Cataloging Process

Step 1: Assigning a personnel or a nodal officer from within the department or agency

Each department or agency should identify a nodal personnel who will be responsible for identifying the data-sets maintained/owned by the department and ensuring all relevant information is populated in the MDC. Usually, the person most familiar with the data-set should be assigned to populate the MDC and ensure the entire compilation process. This could be the IT personnel; program officer or any other staff member.

Step 2: Creating a data collection format (while keeping data use in mind)

The critical step while cataloging the datasets is to develop a standardized format for collating all information. This step helps organize all information into meaningful categories. These minimal sets of indicators or tags would help create a master index to all the key datasets, will contain information about the datasets, and help prioritize the datasets required to be further cataloged. This tabular format can be designed collaboratively by the nodal officer who can work with multiple people or teams who have the knowledge about the individual datasets being entered into the format (A data catalog template is shared here for reference)

For instance, information could be classified, namely into,

- Basic Information (scheme name, title of the dataset),

- Data contents related information (granular unit of data, scale of data, personal identifying fields and so on),

- Data collection related information (point of entry of data, eligibility for inclusion, frequency of data collection and so on),

- Data quality related information (data validation/ measures taken, format in which data are available),

- Data storage related information (database management system used, list of stakeholders involved in data management and their roles), and

- Data use related information (who uses the data and for what purpose, frequency of generation of insights, actions taken using the variable in the dataset)

Step 3: Listing down the existing datasets and prioritizing the ones that need to be cataloged

The next step is to gather all information regarding the schemes and programs implemented by the department along with the contact information of nodal officers for each scheme in the tabular or spreadsheet format. Identifying which datasets need to be cataloged can be done on the basis of the usability, ownership and the depth of the dataset. For example, the Tamil Nadu e-Governance Agency (TNeGA) is developing a State Family Database(SFDB) of beneficiaries across Tamil Nadu in an effort to help departments better formulate and target social welfare schemes. The SFDB is being created by consolidating beneficiary data from multiple departments for the social welfare schemes. As each of the departments will have their own data collection and storage processes, efforts are made to understand how the information can be linked using common unique identifiers. The catalog will then be shared with departments to help them correct for data quality issues to promote better use of data for monitoring, planning and decision-making.

The following criteria can be used to prioritize the datasets to be cataloged:

- Type and the usability of the dataset (Is the data for external use or internal use ?, Is the dataset a welfare scheme/program dataset or is it a reference dataset [4]?);

- Ownership of data (Is the state/department owner of the data collected?, Is the dataset a part of a central scheme?) and,

- Level of data available (Aggregated or granular dataset)

Step 4: Compiling the information on the data catalog comprehensively with clear definitions

Before starting with the compilation of information, understanding who is the owner of each dataset, are there multiple departments who own a single dataset, or specific datasets owned by many departments helps coordinate for each dataset to be cataloged along with the partner line departments. Defining the roles of each of the owner, reviewer and approver helps standardize the accountability and define the intended use and scope of the data by the owners (who can help with identifying datasets, who can own the dataset other than the listed owners, and who can help with documentation). Then the process of compilation of all information can begin. The next step is to collate all the synthesized information in a standardized format. This documentation is a crucial step to enable and improve the use of the datasets.

Indicative list of resources that can be used to fill information into different sections:

- Department websites for comprehensive information on all schemes/programs

- Operational documents of the scheme/program

- Registration forms/performance under the scheme

- Manuals on the MIS linked to the program/scheme

- J-SON files for schemes and back-end forms for information about the data contents, frequency of updating information, access modalities

- Detailed interviews with IT head/officer that manages the data for the program for information on data contents, data architecture, data documentation & quality

- Interview/discussion with program officer for information on data collection process

Step 5: Ensuring data quality, completeness and authenticity checks are conducted

Once the MDC format is filled by an assigned staff member, the document must be reviewed to make sure that all basic information is filled in correctly, check for fields that are being recorded in the fields of the table, whether observations for a field are completely missing, are there any fields where more than a threshold percentage of the observations are missing, or whether the primary key is duplicated. There are several other quality checks that can be undertaken to ensure that the data contents are ready for any further analysis.

Step 6: Setting up protocols based on defined frequency of updating

The usual documentation process relies heavily on creating a one-time reference for datasets, but the efficacy of a metadata cataloging process relies on regularly updating the information, such that that it is relevant and ready for use at any given period of time. Once the above processes are undertaken, there is a need to establish clarity on how the catalog will be maintained and updated on a periodic or regular basis. Setting up protocols while looking at each dataset and its frequency at which it is updated and used is necessary to be clearly articulated when the catalog is being prepared. It is important to also update the catalog at least once a year as there may be changes to information systems, new data-sets could be added on or upgraded.

The purpose of the MDC is to facilitate greater data use with focus on inter-linkages and interoperability across departments. To this end, the MDC should be hosted on the department’s/ Ministry’s website or maintained by a central agency such as Planning or Economics & Statistics, or Information Technology departments. Selected fields of the catalog can be made viewable (publicly) and more detailed information through registered access (requiring login credentials) for those outside of the government. After operationalizing the above steps it is important to include protocols for sharing aggregated and non-aggregated but anonymized datasets with various partners, setting up standardized processes to make sharing of datasets secure, streamlined and sustainable. Please refer to the section on Data Sharing Standards for more details on the procedures for data exchange and external data use.

Footnotes

[4]4 Reference data is the data used to classify or categorize other data. For example, a dataset contains the inclusive criteria for a beneficiary by defining the cut-off age or weight, for enrolment in a particular scheme. Another operational dataset (such as ICDS), accesses the former dataset to track ongoing activities across all Anganwadis. Here the first dataset acts as a standard metric and is referred to as the reference dataset.